Solo: A Star Wars Story. Everyone’s going to see it; it’s practically a philosophical requirement at this point for fans of the Star Wars franchise. Writing about a film series this widespread is inherently difficult, because the series is timeless and its special effects were so cutting edge in their time that the excitement persists into 2018. Trying to out-invent the camera work, lighting, and film mastery of the entire Star Wars franchise, let alone the inventive work of the original trilogy (Episodes 4, 5, and 6: A New Hope (1977), The Empire Strikes Back (1980), and Return of the Jedi (1983)), has proven to be one of our industry’s most powerful challenges.

Technically, this movie not only tells the story of a young Han Solo and his journey, but it also continues to innovate on some commonplace cinematic conventions, like green screens and chroma keying of the worlds in the background. Green screen work is expected in a movie that takes place in outer space, because we obviously can’t do location shoots in “galaxies far, far away,” but Solo: A Star Wars Story takes the suspension of disbelief created by the background worlds to an entirely new technical level.

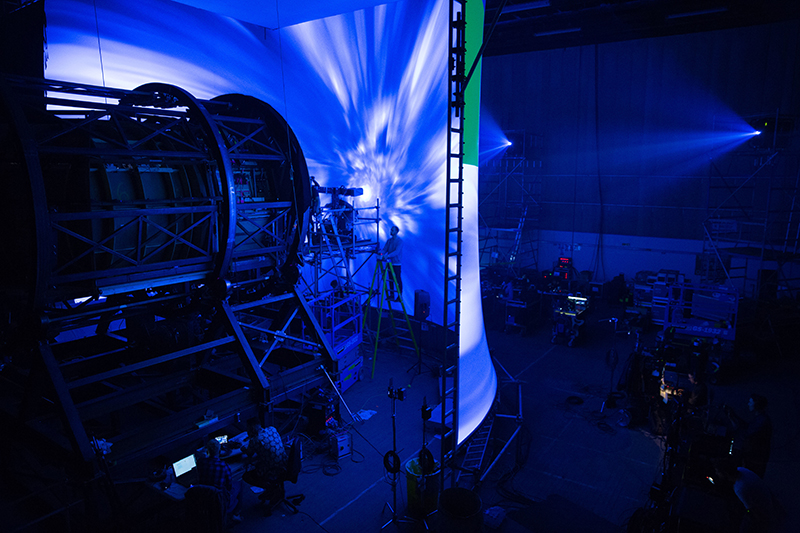

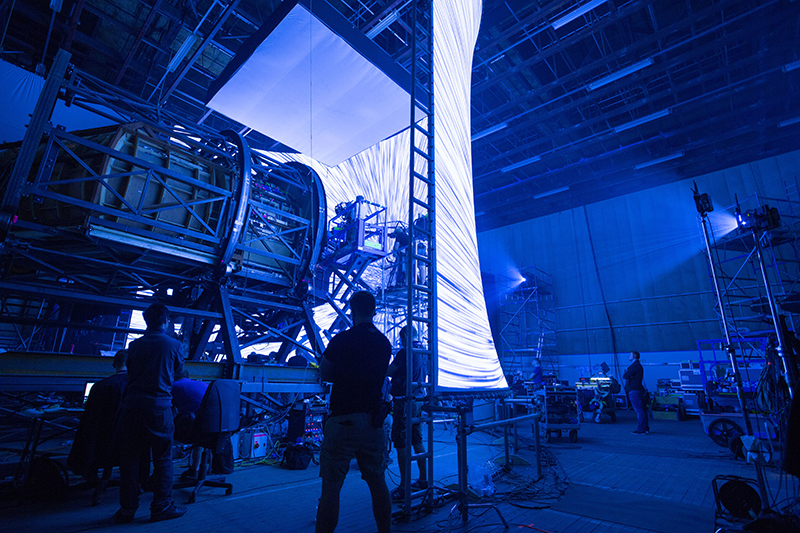

Instead of filming some of the scenes and color keying the heavens into the background, the heavens were projected onto canvases, and LED walls were used to give the best representation of the actual light an event would cast on actors and on reflective surfaces. How does one capture the actual light that jumping to lightspeed would create, or what an explosion from an energy weapon might look like? This movie is visually stunning, and the mastery behind it shows. In addition to the work the visual samurai at Lucasfilm did to make Solo: A Star Wars Story, there’s a company in Los Angeles that has been innovating the way filmmakers are able to give life to the world in the background. Meet Lux Machina, and the idea of extremely high definition projected backgrounds and LED wall-induced environmental lighting.

A Chat with Lux Machina’s Phil Galler

I had a conversation with one of the principals of Lux Machina, Phil Galler, about the experiences they had on the set of Solo: A Star Wars Story and the innovations the company brought to the film. You’ve seen Lux Machina’s work before, on broadcast shows like the American Music Awards, the Country Music Awards, and the Golden Globes, and in one of my favorites, the movie Oblivion with Tom Cruise, Morgan Freeman, Olga Kurylenko, et al. The sky tower work, all of that gorgeous scenery of a residence above the clouds, and all of the reflections in every reflective surface of that set were created in large part by the methodology that Lux Machina brought to that production, alongside VER/PRG. Tom Cruise said that having those projections in the environment “gave the film a very epic feeling.”

PLSN: Tell me a little bit about what Lux Machina has done with the Solo project.

We’ve got a pretty close relationship with Lucasfilm, so we’ve been working on R&D stuff for quite a while, and in the process of doing so, we were invited into Rogue One and then into Solo. For Solo, the original goal was doing a really large-format projection setup for a “space yacht,” you could call it. In doing that, the rest of the group, and in particular visual effects supervisor Rob Bredow, also wanted to explore the possibility of replacing the LED work they were doing for the cockpit and other spaceship work with projection. We ended up setting up a projection rig to also handle the Falcon cockpit. We also did some interactive LED lighting, surrounding a vehicle or set with some low-res LED and driving some plates through it, and getting the interactive lighting from that setup. We also did that for the Falcon, all of the speeder work, and a handful of other ships like that. The three main key sets were the speeder chase and the LEDs, the large projections for the space yacht, and the high-res projection and camera work on the Falcon.

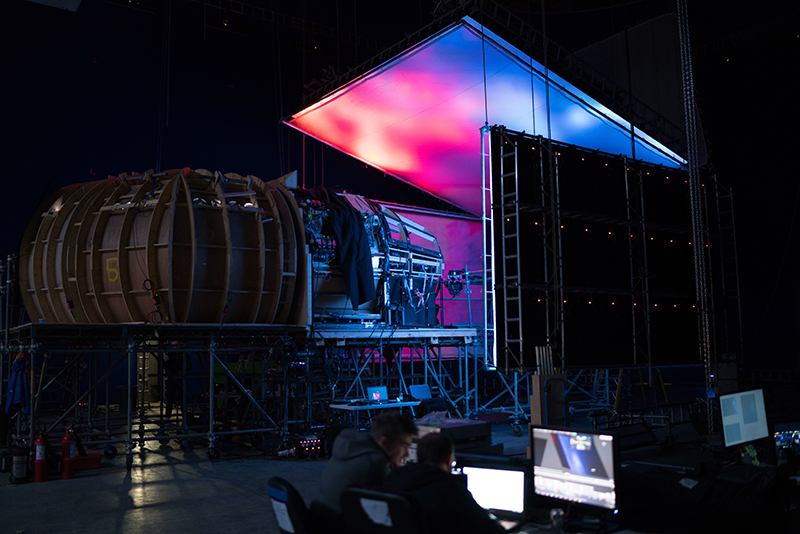

The technology — is it a mix of high resolution projection and LED wall together?

We never mix the technologies on the same set; it’s always one or the other. For example, there’s a version of the cockpit where we did everything in this high-res projection, then we took all the projectors down. There was a point when the production rewrote part of the script, and they had to go back and get dialogue, so we replicated the lighting from the projection on LED screens that we had already hung to do the speeder work. The LEDs are never in camera; it’s just the interactive lighting work from the screens themselves.

Can you explain how the LED reflection work that you do plays into the lighting of the film?

So the ultimate goal of the projections was to get really crisp high-res reflections and lighting, but to also capture all of that in-camera, which is why we went with the projections. It’s so difficult to pull a green screen off of an LED wall at the quality level ILM needs. If you punch in on an LED wall when you are using a very high-resolution camera, like a 6K camera, for example, you can still see the pixels of the wall, even if you’re 40 feet away. If you’re trying to do any kind of rotoscope work around anything with frizzy hair on an LED wall, it’s incredibly difficult. So, we headed back to projection. We actually had 4K projection for the Falcon cockpit work.

Essentially, the work you’re doing can replace the green screen process work? Can you speak a little on how the chiefs and principals are looking at the replacement of the green screen work and the work that Lux Machina is doing?

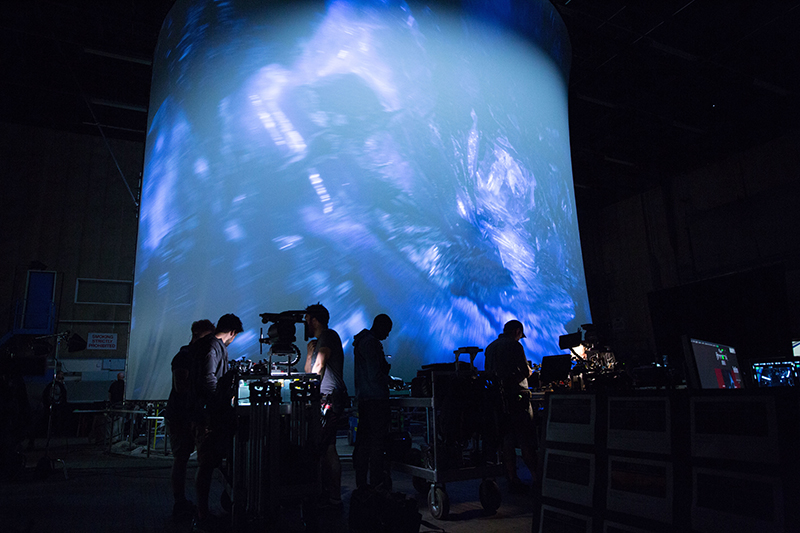

I think the general feedback is that when the technology is in sync with the cameras, when it is all programmed correctly, and when all the complex boxes are checked, it looks great. It works really well, the lighting is phenomenal, and it’s something they wouldn’t be able to get or do with the green screen process. Also, the immersion for the actors is significantly better than anything that has come before it. Take the Falcon cockpit scene, for example: it was surrounded by a 180-degree screen, 11 feet from the front of the cockpit, which encapsulated the entire cockpit. When you were sitting inside there and it went to hyperspace, that’s all you saw; there was no break from the immersion. It was almost like being on a ride. Because of that, it’s a lot easier for the actors to tell a story, because they feel like they are in the place they’re supposed to be in, surrounded by the environment in which the story they’re telling exists.

At the core of it, I think that was really one of the best value adds. You’ve got the lighting, you’ve got the projection, which looks outstanding, but for actors to sit in an imaginary part of space on a green screen set and have a conversation is a little bit more abstract than, say, a scene taking place on an actual mountain, where the visual field for the performer is more realistic. Because we’re able to provide that environment, I think it added to the huge wow factor and helped rebirth the Falcon, but also helped the actors better tell the story. There were a lot of techniques we used to improve the actors’ quality of life, like providing eye lines in camera (places for them to look), with content that really helped with the environmental immersion that they wouldn’t get any other way.

A back-end question: who provided the production for the filming?

Our projectors and lead projectionists came from Sweetwater, but the LED came from VER UK. Projectors were a considerably larger part of the overall production budget for us than the LED wall was. The project used all brand new Panasonic 30K laser projectors, and we provided the servers. We actually did two iterations of the large format projection — one on the main deck, which was like the first floor, and one on the upper deck, which we did a few weeks later. The main deck used 15 of the HD projectors, and the upper deck used 11. When we did the Falcon, we used five of the 4K projectors for that environment. For the Space Yacht scenes, we used all 0.36 lenses, so all of the new snorkel lenses. We’re actually the production company for our portion of the project; the client is our client.

We worked directly with Industrial Light and Magic, and provided everything from developing content templates, designing the screens, and previsualizing the screens to working with the actual production side on labor, installation, and getting everything in place. We came in with two or three people, two programmers, and a few project managers. It was quite an undertaking, and the work really paid off in the visual fidelity. Totally worth it.

Our use of disguise media servers played a large part in the playback side of the production. We used quite a few d3 4x4xs and gx2s, TouchDesigner and Notch, to build some really nifty real-time controls, and we got a lot of support from disguise and their team along the way.

Tell me a bit about how you handled the pre-viz environment. How did you visualize all of this work with the client?

Industrial Light and Magic (ILM) does all of their work inside Maya, so we did all of our work inside Maya. The way we worked was that we’d start inside Vectorworks and come up with a general idea. We would also pass Vectorworks drawings back and forth: Vectorworks drawings with production, Maya scenes with ILM. The Space Yacht, for example, was all Vectorworks, because the way the shots were set up, it was less important that the screen have full coverage, given the nature of the set and the somewhat larger distance from the screen to the set. That particular screen setup was done in Vectorworks, with the screen mapping portions done in disguise, Mapping Matter, and Vectorworks.

We’re very traditional with respect to the math, which we do by hand the first time to make sure it’s right. We always calculate every projector placement and its beam information before we try to drop it into anything, just so we have a real groundwork for it and we’re not relying on anyone else’s calculations. The cockpit work for the Falcon was a bit more intense; ILM did tech viz in Maya, and we passed Maya files back and forth. We did screen design and projector placement in Maya as well, and then ported back into Vectorworks for construction drawings.
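As a rough illustration of the kind of first-pass placement math Galler describes, here is a minimal Python sketch. It is not Lux Machina’s method. It uses the standard definition of throw ratio (throw distance divided by image width) and assumes the “0.36 lenses” mentioned elsewhere in this interview are 0.36:1 throw-ratio lenses. The names `plan_blend` and `BlendLayout`, and the optional edge-blend overlap fraction, are hypothetical. Lens shift, screen curvature, and keystone are ignored.

```python
from dataclasses import dataclass


@dataclass
class BlendLayout:
    image_width_ft: float     # width each projector's image must cover
    throw_distance_ft: float  # lens-to-screen distance needed for that width
    overlap_ft: float         # width shared by two neighboring images


def plan_blend(screen_width_ft: float, projector_count: int,
               throw_ratio: float, overlap_fraction: float = 0.0) -> BlendLayout:
    """First-pass layout for a single row of side-by-side projectors.

    Assumes a flat screen and identical projectors. overlap_fraction is the
    share of each image that overlaps its neighbor (a hypothetical value).
    """
    if projector_count < 1:
        raise ValueError("need at least one projector")
    if screen_width_ft <= 0 or throw_ratio <= 0:
        raise ValueError("screen width and throw ratio must be positive")
    if not 0.0 <= overlap_fraction < 1.0:
        raise ValueError("overlap_fraction must be in [0, 1)")

    # Total width W = n*w - (n-1)*overlap*w, solved for the image width w.
    effective_images = projector_count - (projector_count - 1) * overlap_fraction
    image_width = screen_width_ft / effective_images

    # Throw ratio = throw distance / image width.
    throw_distance = throw_ratio * image_width
    return BlendLayout(image_width, throw_distance, overlap_fraction * image_width)


if __name__ == "__main__":
    # Figures quoted in the interview for the Space Yacht main deck:
    # 15 projectors, a screen about 375 feet wide, 0.36 lenses.
    no_overlap = plan_blend(375, 15, 0.36)
    print(f"{no_overlap.image_width_ft:.1f} ft wide, "
          f"{no_overlap.throw_distance_ft:.1f} ft throw")

    # Same screen with an assumed 15% edge blend between neighbors.
    blended = plan_blend(375, 15, 0.36, overlap_fraction=0.15)
    print(f"{blended.image_width_ft:.1f} ft wide, "
          f"{blended.throw_distance_ft:.1f} ft throw")
```

With no overlap, the quoted figures work out to roughly 25 feet of screen per projector and a throw of about 9 feet, which suggests why very short-throw lenses suit a set that sits close to the screen. Any real edge blend widens each image and lengthens the throw accordingly.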

Can you speak about the production process, timings, schedule, things like that?

We did our first camera test in December of 2016, where we did a test between in-camera LED and in-camera projection and decided on going with the projection. In June of 2017, we went to London to install the Falcon cockpit and Dryden Vos’ (the villain’s) Space Yacht. For each of those, we were able to get down to three days of installation and alignment. When we did Oblivion, for example, it was 21 projectors, which was about 400 feet by 21 feet, and the tools that were available back then were not as good as they are now, so there were challenges to overcome in the installation process. With Solo, we were able to get the process down to those three days, so on a Monday we were dropping our first projectors, and by Thursday, camera testing was taking place.

Dryden was covered with the use of 15 projectors, 375 feet by 24 feet, all with those 0.36 lenses. The Falcon cockpit was a little more challenging, taking about a week’s worth of time. Because we designed a 180-degree curved screen, we had to get curved pipe made and rigged; that screen was about 60 feet by 30 feet. We used five of the 4K Panasonic projectors, which took about a week to load in. We had about six weeks of shooting for the Space Yacht main floor and study, and a week’s turnaround where we took everything out for the refit of the Space Yacht’s study and reconfigured the screen to about 275 feet, and we used 11 projectors for that setup.

While all of that was going on, about six continuous weeks of shooting, we also set up an LED wall on one of the other stages to get interactive lighting shots on weather days or for the speeder chase scenes. We used a lot of projectors on this project, at least 40: 30 Panasonic 30K laser projectors for the main scenes, and ten or so for the closer-quarters scenes.

What’s coming up for Lux Machina that you can talk about?

I can’t talk about anything in the film world without getting into trouble, of course, but we’ve got lots of projects on the horizon. In the television world, we have the Country Music Television awards coming up, the MTV Movie Awards, the Fox Upfronts, and long term, we’re looking to do the American Music Awards again — mostly the award show circuit and a bunch of corporate client work towards the end of the year. We’re definitely keeping busy.

The Benefits for Actors

One thing that became evident to me as I interviewed Phil Galler was that the work Lux Machina is doing not only enhances the lighting of the picture, but also gives the performers an actual environment in which to interact.

On the technical side, we get very involved in how we can make high-tech magic with a bevy of equipment, digital processes, and software, but we often forget that it is the actor who is telling a very large portion of the story.

So while much of the work done on a movie is quantified in cost terms by how much it adds to the overall look and feel of the picture, the award-winning performances that we hold in such high regard are often performed in front of green screens.

How do you quantify technology that gives the actors an actual environment in which to act? Lux Machina’s work literally places the actors inside the world with which they’re interacting, and allows them to tell the story not only individually, but as a group.

I don’t think that we consider this enough as an essential component of movie making and storytelling, but Lucasfilm and Lux Machina are turning the page and giving life to the background. —Jim Hutchison

VFX Immersive Environments Consulting Designers and Crew

- Design and Execution: Lux Machina Consulting

- VFX Media Server Project Manager: Philip Galler

- VFX Media Server Project Manager: Zach Alexander

- VFX Media Server Project Manager: Wyatt Bartel

- VFX Media Server Programmer: Jason Davis

- VFX Media Server Programmer: Kris Murray

- VFX Media Server Programmer: Jake Alexander

- Sweetwater Lead Projectionist: Jason Riggen

- Sweetwater Lead Projectionist: Jim Gold

- Projectionists: Kevin Evans, Dan Croft

- Sweetwater Accounts/President: Ron Drews

- Lead VER LED Tech: John “JR” Richardson

- VER Accounts: Ellie Johnson and Jonny Hunt