The terms we use in live production are often borrowed or adapted, much like our tools. There is probably an entire study to be done on the way that the entertainment technology industry borrows, adapts, and iterates on top of broader trends in innovation, and on the way that both the intellectual and technical trends of society are filtered, processed, and reflected back by the entertainment industry. This is not that essay.

The term Extended Reality predates our current use cases, much like the term Virtual Production has recently been expanded to incorporate LED volumes. Extended Reality was first used in the context of philosophy, but at some point, most likely with the Milgram (Paul, not Stanley) & Kishino paper in 1994 (www.bit.ly/Milgram_Kishino1994), the idea of a graduated range of experience, from unmediated “reality” at one end to fully mediated “virtual reality” at the other, took root. That idea gave us a series of acronyms (AR, VR, XR, MR) that we now use to try to make sense of the ways that digital experiences are infiltrating our lives.

This is a good point to provide a very brief glossary of sorts. Please remember that these are my interpretations of these terms and that I do have an agenda.

Reality—This is the thing that our brain constructs that we all accept as the real world. The things are the things. The colors are the colors. We feel the wind coming off the ocean and the heat from a nearby fire. There are things that itch. This is sometimes called “consensus reality” because the people who are really into Extended Reality spend a lot of time pondering how our brains construct the waking hallucination that we refer to as “the real world”.

Virtual Reality (VR)—This is a very literal term: it delivers an environment that is virtually real. A Virtual Reality system sits between a user and the real world and substitutes digital content for real content. This means that a Virtual Reality experience is explicitly fully immersive and functions by replacing reality. Ready Player One is probably the go-to reference here.

Augmented Reality (AR)—The aim here is typically to enhance reality, often by using “portals” or “lenses” that offer a digitally modified view of a real space. These add information or other stimuli that supplement what users are getting through their own senses. If someone took that meme where you can see the calculations floating in space in front of you and made it actually happen, it would be AR.

Extended Reality (XR)—This covers everything from the lightest touches of Augmented Reality through to the most fully comprehensive Virtual Reality and includes everything in between.

Mixed Reality (MR)—Mixed Reality was one of several alternate terms for Augmented Reality. It diverged from AR over time and then fell out of favor. It assumes some level of mediated immersive experience that explicitly includes the user’s local built environment.

It is also worth noting that these definitions include sound, light, and anything else we can control (haptics, for example) to more completely render and manage an experience.

Augmented Reality and Virtual Reality have more extensive and well-defined histories. Terms covering the different flavors of mediated reality, such as Mixed Reality and Extended Reality, fill in the gaps and incorporate the subsections of the Virtuality Continuum that do not neatly fit into AR or VR. For example, there are multiple applications that use secondary displays and light sources to extend augmented reality and 3D displays into more continuous and immersive experiences.

While we all agree that there is something to the world of “digital twins” and immersive production, we do not, as an industry, use the terminology consistently. Part of the reason is that the tool kit we are using is not the product of a focused development effort aimed at a specific endpoint but rather a haphazard accumulation of tools and knowledge drawn from spatial computing research, special effects, VR development, 3D cave environments, video games, projection mapping, freeform interaction, and science fiction movies.

The very useful Virtual Production Glossary (www.bit.ly/VPGlossary) has a stub covering Extended Reality (XR) as an open-ended, all-encompassing term, and, strangely, I think that is precisely the correct way to frame it. But it is also the root cause of some confusion. Somehow Extended Reality is both everything and one very specific thing that I will address below.

As a concept, some aspects of this are addressed in architecture as Perceptual Space, which is part of a broader definition of Visual Space (www.bit.ly/VisualSpace). This is an interesting tangent because it covers everything from elemental geometry to how our brains make pictures. Human perception is at the center of these definitions, and the study of how a person sees a volume of space grows out of how people learned to draw a space. This is yet another way that our narrow technical discussions about XR sit in the middle of many other parallel or intersecting conversations about how people use space.

What Do Other People Mean when They Say XR?

XR is most often used as an acronym for Extended Reality and, as noted in the definition above, broadly covers every possible combination of things within the virtuality continuum that are used to create a new SYNTHETIC SPACE, allowing a user to partially or completely replace or enhance the real world.

One of the most familiar examples is the use of AR on a smartphone, where your phone acts as a portal that overlays digital information on the space you are in. When you use an iPhone to measure something, you are engaging with a digital version of your surroundings that can give you an approximate measurement of a real physical object you are looking at. It does this by building a model of the space from sensor data, combined with your input on the two points being measured.
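To make the measuring example concrete, here is a minimal Python sketch of the geometry involved, under stated assumptions. It assumes the phone has already detected a floor plane and knows its own position (the numbers below are hypothetical), and that each tap has already been turned into a ray direction in world space; `ray_plane_hit` is an illustrative helper, not Apple’s ARKit API. Each tap becomes the point where its ray meets the plane, and the measurement is the straight-line distance between the two points.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_plane_hit(origin: Vec3, direction: Vec3,
                  plane_point: Vec3, plane_normal: Vec3) -> Vec3:
    """Intersect a ray cast from the phone camera through a tapped pixel
    with a plane (e.g. a floor) that the device has already detected."""
    denom = dot(direction, plane_normal)
    if abs(denom) < 1e-9:
        raise ValueError("ray is parallel to the plane")
    t = dot(sub(plane_point, origin), plane_normal) / denom
    if t < 0:
        raise ValueError("plane is behind the camera")
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])


# Hypothetical values: phone held 1.5 m above a floor plane at y = 0 (y is up).
camera = (0.0, 1.5, 0.0)
floor_point, floor_normal = (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)

# Two user taps, already converted to ray directions in world space.
p1 = ray_plane_hit(camera, (0.0, -1.0, 1.0), floor_point, floor_normal)
p2 = ray_plane_hit(camera, (1.0, -1.0, 1.0), floor_point, floor_normal)
print(f"{math.dist(p1, p2):.2f} m")  # prints "1.50 m"
```

A real device refines both the plane and its own pose continuously from camera and motion sensor data, which is why on-screen measurements tend to settle over a few seconds.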

NVIDIA’s Omniverse XR (www.bit.ly/Omniverse_NVIDIA) is described as “an immersive spatial computing reference application.” It is worth noting that the term Spatial Computing does not show up in Google Ngram until 1990, just after the term Virtual Reality starts to gain currency. Since then, VR has arrived in waves in the consumer electronics market (Nintendo’s Virtual Boy, for example), while Spatial Computing has remained primarily an academic term. But I would argue that Spatial Computing is largely synonymous with Extended Reality, and this is an important link to make in understanding the scope of the opportunity.

One of the quirks of the virtuality continuum is that it is possible to have a real experience outside of the continuum (like when your iPhone battery dies), but it is not possible to have a purely virtual experience, because you still need a physical person. This holds in spite of academic papers that appear to show two people having a meeting in digital space, which leaves me wondering if the researchers think TRON was a Ken Burns documentary.

Virtual Reality, much to my surprise, is one of the least tortured terms in the lexicon. We are all pretty sure it involves strapping a box to your face and looking at things that may or may not include video of the room you are in and/or handheld controllers. You may or may not have legs. This stands in contrast to the term Augmented Reality, which is now used to refer to everything from futuristic contact lenses, eyeglasses, headsets/HMDs, smartphone applications, and transparent displays to the use of a digital front plate in live broadcast. But each of these applications fits squarely within the scope of Augmented Reality, as they fuse digital assets with reality either directly, in the case of glasses or phones, or indirectly, in the case of broadcast cameras at a live event.

If you think the word “indirectly” is doing a lot of work in that last sentence, you are correct. What about the popular recent examples of people dropping giant CG animals, dragons, and sandwiches into the middle of a stadium as part of a live event? Is that really Augmented Reality when I am watching it on TV? What about the historic use of AR in sports to mark lines and track hockey pucks? Does it matter whether the people at the event can see the fusion of those digital assets with their real environment? Now think about this from the production team’s point of view: they can see the action happening on the field, and they can see the camera output that merges a digital source with the live camera feed. So there is a purely digital version of this world somewhere, served up based on sensor data coming from the cameras. Content from that server is composited into the frame, and as the camera moves, the content in the server moves with it. In some ways, taking measurements with a smartphone and marking the line of scrimmage with a digital line are not that far apart. Both tasks require real-time digital information about a volume of space in order to lay the assets into the real background.
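The camera-driven part of this can be sketched in a few lines of Python. This is a simplified illustration, assuming a pinhole camera whose tracking system reports only position and pan (yaw) plus a focal length in pixels; real broadcast systems also track tilt, roll, zoom, and lens distortion, and `project_point` is a hypothetical helper rather than any vendor’s API. A virtual marker fixed on the field is re-projected into the frame every time the tracking data changes, which is why it stays put on the field while the camera moves.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def project_point(point: Vec3, cam_pos: Vec3, cam_yaw: float,
                  focal_px: float, cx: float, cy: float
                  ) -> Optional[Tuple[float, float]]:
    """Project a world-space point into a tracked camera's image using a
    pinhole model. Only yaw (pan) is modelled here; tilt, roll, zoom and
    lens distortion are ignored. Returns None if the point is behind
    the camera."""
    # Camera basis vectors in world space (y is up, yaw 0 looks down +z).
    forward = (math.sin(cam_yaw), 0.0, math.cos(cam_yaw))
    right = (math.cos(cam_yaw), 0.0, -math.sin(cam_yaw))
    d = tuple(p - c for p, c in zip(point, cam_pos))
    x_c = sum(a * b for a, b in zip(d, right))
    y_c = d[1]  # the up axis is unchanged by a pure pan
    z_c = sum(a * b for a, b in zip(d, forward))
    if z_c <= 0:
        return None
    # Image v grows downward, so the vertical term is subtracted.
    return (cx + focal_px * x_c / z_c, cy - focal_px * y_c / z_c)


# Hypothetical 1920x1080 camera, 2 m above the field, looking at a virtual
# marker that lives at a fixed spot on the field (y = 0) 10 m away.
marker = (0.0, 0.0, 10.0)
print(project_point(marker, (0.0, 2.0, 0.0), 0.0, 1000.0, 960.0, 540.0))
# prints (960.0, 740.0)
# The camera trucks 1 m to the right: the marker slides left in frame.
print(project_point(marker, (1.0, 2.0, 0.0), 0.0, 1000.0, 960.0, 540.0))
# prints (860.0, 740.0)
```

The marker’s world position never changes; its on-screen position shifts only because the camera did, which is the same relationship a digital line of scrimmage relies on.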

Mixed Reality

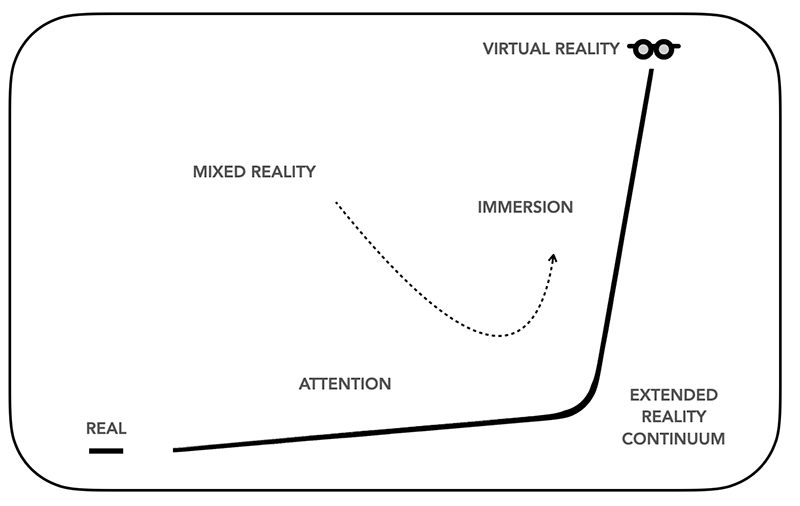

If the battery on your phone dying represents a hard line between consensus reality and Extended Reality, then we need to understand that there is a gray area between where unmediated consensus reality ends and where the more identifiable aspects of Extended Reality begin. It has been referred to as a continuum since the beginning, so we should not be surprised that there is a little ambiguity.

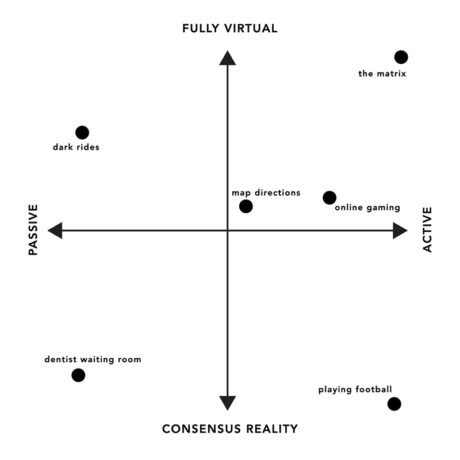

The act of engaging with an information display or other stimuli does not always translate into a level of immersion that we would associate with a different reality. A billboard in Times Square is part of the general visual noise that you process when you are in that environment. A TV in the waiting area at the doctor’s office is not much different. As engaged as you might be with an episode of Detectorists, you are looking at a screen and passively listening to the synced audio track that accompanies the visuals. There are many digital and analog experiences that are not a part of Extended Reality.

- Attention—These are the things that create distance between a user and reality without crossing into Extended Reality. Doomscrolling is a good example, as is the TV some of us watch to turn our brains off.

- Partial Immersion—This is the gray area between Attention and Immersion. Video games and other engaging content fit into this category in some cases. Haptics in game controllers, for example, create a tether between a user and an environment.

- Immersion—These applications put the user in the environment, using multiple technologies to separate the user from the real world while creating a new world. This active negation of the real world can be complete or partial, but some element of it is necessary to create these new experiences. Augmented Reality and Mixed Reality fall into this category.

Mixed Reality was originally conceived of as a subset of Virtual Reality. The Milgram & Kishino paper “A Taxonomy of Mixed Reality Visual Displays” refers to MR as a “subclass of VR related technologies that involve the merging of real and virtual worlds”. But that framing has drifted as VR became predominantly associated with head-mounted displays and people in the field experimented with the different ways that light and audio can be used to add layers to immersive experiences.

Mixed Reality today can be seen as a broad term that employs all the tools available to create something that seamlessly merges real and digital elements into a single immersive experience. In this sense, we can look back at the momentary obsession with projecting interactive content on mall floors and locate that at the Attention end of the chart. But if that experience were completely immersive, with the floor projection accompanied by room lighting and an audio environment fully integrated into a space that you navigated while wearing Augmented Reality glasses, that would be Mixed Reality. Immersive, AR-driven laser tag in a projection-mapped volume, where the real world and the digital overlay operate in a single XYZ coordinate space, is Mixed Reality.

A range of applications (beyond laser tag) can be imagined where it is critical to fix visual and aural data in a common XYZ coordinate space relative to one or more individuals. Some of these applications may provide data for a single viewer, so the system needs to understand that viewer’s point of view at any given moment.

These types of applications will be made possible by the development of viable light field and super multiview displays and the ability to host systems that can perform the required sensor fusion locally. While information above a certain density may require a head-mounted Augmented Reality display, there are many things that we will be able to communicate ambiently using new types of displays.
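As a rough illustration of fixing aural data in a shared coordinate space relative to individuals, the Python sketch below computes, for each tracked listener, the direction of a sound source that stays put in the room and a simple loudness factor. It assumes the tracking system supplies each person’s position and facing (yaw); the inverse-distance gain is a deliberate simplification, and `source_relative_to_listener` is a hypothetical helper, not a spatial audio engine (real ones use HRTFs or loudspeaker panning).

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def source_relative_to_listener(source: Vec3, listener: Vec3,
                                listener_yaw: float,
                                ref_distance: float = 1.0) -> Tuple[float, float]:
    """Return (azimuth_degrees, gain) for a sound source fixed in the shared
    XYZ space, as heard by one tracked listener. Azimuth is positive to the
    listener's right and 0 straight ahead; gain is a simple inverse-distance
    rolloff, clamped to 1.0 inside ref_distance."""
    # Listener's facing and right-hand directions (y is up, yaw 0 faces +z).
    forward = (math.sin(listener_yaw), 0.0, math.cos(listener_yaw))
    right = (math.cos(listener_yaw), 0.0, -math.sin(listener_yaw))
    d = [s - l for s, l in zip(source, listener)]
    azimuth = math.degrees(math.atan2(sum(a * b for a, b in zip(d, right)),
                                      sum(a * b for a, b in zip(d, forward))))
    distance = math.sqrt(sum(c * c for c in d))
    gain = ref_distance / max(distance, ref_distance)
    return azimuth, gain


# Hypothetical room: one fixed source, two tracked people both facing +z.
source = (1.0, 0.0, 1.0)
print(source_relative_to_listener(source, (0.0, 0.0, 0.0), 0.0))  # front-right of A
print(source_relative_to_listener(source, (2.0, 0.0, 1.0), 0.0))  # directly left of B
```

The source has one position in the room, but every listener gets a different rendering of it, which is the per-person point-of-view problem described above.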

At the inflection point between Attention and Immersion, there is a range of other applications that exist within this merged coordinate space. Installations that integrate projection with LiDAR to manage interaction are part of this space. These environments can also integrate spatialized audio along with atmospheric lighting to further immerse the audience in the Synthetic Space. Using the term Mixed Reality for these applications helps sharpen the definitions of Virtual Reality and Augmented Reality for other applications.

Nils Porrmann has proposed an alternative chart for the space. This view is tied to engagement and whether the user is passive or active. How we are using a space is a central question and will define how technologies evolve to fill in these new requirements.

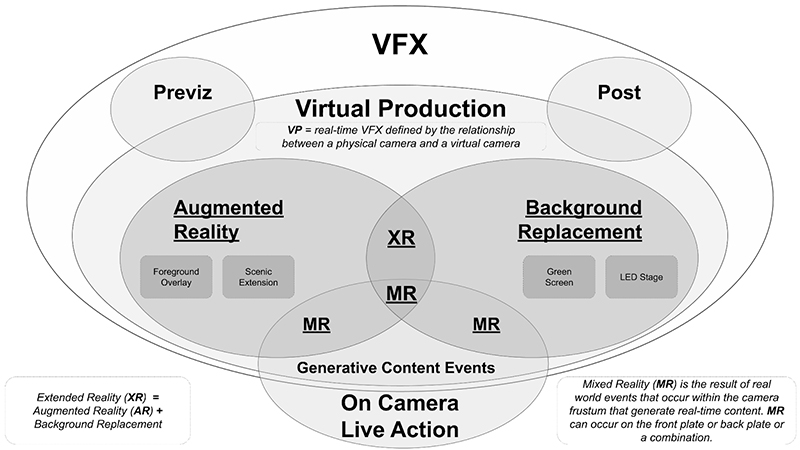

And Laura Frank has addressed this within the context of virtual production, which she defines as “real-time VFX defined by the relationship between a physical camera and a virtual camera.” This is an important definition, since everything beyond it is additive to that core relationship between where the filmmakers are and the digital reality of the scene they are shooting.

What Do I Mean when I Say XR?

We currently use XR in the established context of in-camera visual effects (ICVFX), as a tool that builds on process shots and other in-camera trickery (insane split-optic custom camera lenses). The use of this merged coordinate space developed in parallel in Virtual Production and sports television; it all started in the 1990s, leading to real-time overlays in sports and a string of Robert Zemeckis movies. In both cases, camera tracking data was used to integrate digital information into a shot at a later time. In sports, the later time was milliseconds, since the workflow was optimized for live broadcast. In film, the camera tracking data might also include motion capture and was critical to creating digital assets and compositing them into a scene.

This technology was merged with the process shot a little later on. We all recognize one of the more basic forms of process shot whenever we watch a driving scene in an older film, where the backgrounds were filmed separately and then projected behind the car while the actors appear to drive around Rome. Combining all this lens data with computers capable of real-time rendering solved one of the drawbacks of those process shots: it allows for parallax, because the moving camera and the backplate stay in the same coordinate space. That is what made it possible to use the technology in films like Solo and TV shows like The Mandalorian.
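The parallax point can be shown with a small Python sketch. It assumes a flat LED wall occupying the plane z = wall_z, a tracked camera in front of it, and virtual scenery “behind” the wall; `wall_position` is a hypothetical helper, and real media servers render a full off-axis camera frustum onto the wall rather than computing individual points. For each virtual point, the sketch finds where the line from the camera to that point crosses the wall, which is where the point has to be drawn.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def wall_position(virtual_point: Vec3, camera: Vec3,
                  wall_z: float) -> Tuple[float, float]:
    """Return the (x, y) spot on a flat LED wall at z = wall_z where a
    virtual point must be drawn so that, seen from the tracked camera, it
    lines up with where the point sits behind the wall."""
    if not camera[2] < wall_z < virtual_point[2]:
        raise ValueError("expects the camera in front of the wall and the point behind it")
    # Fraction of the way from the camera to the point where the wall is crossed.
    t = (wall_z - camera[2]) / (virtual_point[2] - camera[2])
    x = camera[0] + t * (virtual_point[0] - camera[0])
    y = camera[1] + t * (virtual_point[1] - camera[1])
    return (x, y)


# Hypothetical scene: wall 5 m from the camera, a hill 100 m away, a tree 10 m away.
wall_z = 5.0
far_hill = (0.0, 1.5, 100.0)
near_tree = (0.0, 1.5, 10.0)

for cam_x in (0.0, 1.0):  # the camera trucks 1 m to the right
    cam = (cam_x, 1.5, 0.0)
    print(f"camera x={cam_x}: hill drawn at {wall_position(far_hill, cam, wall_z)}, "
          f"tree drawn at {wall_position(near_tree, cam, wall_z)}")
```

With the camera centered, the hill and the tree land on the same spot on the wall. Move the camera 1 m sideways and the distant hill’s drawn position follows the camera almost completely while the nearer tree’s shifts only halfway, so the two separate in frame the way they would on location. A fixed backplate in a traditional process shot would draw both at the same spot no matter where the camera went.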

When I say XR today, I am generally referring to the use of this technology in a broadcast environment, where the sensor data and real-time rendering systems are combined with LED walls and mapped lighting to create a fully realized virtual world in camera, in real time. The pandemic led to a burst of innovation in applying these technologies to broadcast.

I am not going to go into too many details since this is well addressed in these articles below. While these articles are a few years old at this point, they contain foundational information on broadcast XR.

- xR for Producers and Directors by Ashraf Nehru (www.bit.ly/XR_Nehru)

- Barriers to XR Adoption in Virtual Production by Laura Frank (www.bit.ly/XR_Frank)

- Mixed Reality Studios: A Primer for Producers by Nils Porrmann et al. (www.bit.ly/XR_Porrmann)

XR is one of the few places where the combination of real-time sensors, lighting, LED video displays, and real-time rendering systems can be accessed and used in a systematic manner. It is certainly possible to use these things elsewhere; a sufficiently interested person could take all the same components and build a consumer attraction. But the ICVFX endpoint of XR stages creates a portal through which we can understand these new Synthetic Spaces.

A Synthetic Space is a hybrid Extended Reality environment where the experience is a seamless combination of the real and the digital.

At some level this is an idealized space where information display, lighting, sound, reflection and other parameters are being controlled within a 3D environment in real time in response to how a space is being used and who is in that space. By 2030, we will have moved from personal computing to personal spaces.

Right now, XR is the best way to play in this space. The X is for experimental. The people who discover the emergent capabilities that we will desire in the future will likely discover them when playing with XR. And in this future, we will casually integrate elements of the real world with digital assets that are seamlessly mapped into that same XYZ coordinate space and we won’t even give it a second thought. And that is what I mean when I say XR.

Thank you for reading and I hope you enjoyed my XRticle.

End Note: What We Call Displays Matters

There is a long list of things that are not holograms or light field displays. A transparent LCD in a light box integrating content with a drop shadow is not a hologram. An eye tracking display that gives you a stereoscopic image is not a light field or a hologram. A really good lenticular display is not a hologram or a light field display. This is not to detract from the quality of those displays but more a note to say that companies that attempt to elevate themselves by making these claims do not necessarily have your best interest as a consumer at heart.

There is no such thing as a “direct view” LED display. There are LED displays and there are LCD displays. What you put behind an LCD does not alter the nature of the matrix of light valves and color filters that creates the image. As an industry, it is not our responsibility to make up for the flaws of one company’s marketing campaign. Can we please drop “direct view” and just call these things what they were called before the popularization of consumer LCD televisions: LED displays?

Matthew Ward founded Tekamaki and co-founded Element Labs, the first company to successfully establish itself at the intersection of lighting and video, releasing products that defined an entirely new market segment. Ward is part of R&D at Fuse Technical Group and is Head of Product at Superlumenary, a product design and consulting company focused on hardware development for the entertainment technology industry. He is also part of the leadership team of frame:work. This article is republished, having first been shared on the frame:work community website https://framework.video/.

Additional Resources

- A Taxonomy of Mixed Reality Visual Displays www.bit.ly/PLSN_MilgramKishino

- Interreality [Phys. Rev. E 75, 057201 (2007)—Experimental evidence for mixed reality states in an interreality system] www.bit.ly/PLSN_Interreality

- Mixed Reality Survey www.bit.ly/PLSN_MixedRealitySurvey

- Mediated Reality with implementations for everyday life www.bit.ly/PLSN_MediatedReality

- What You Need to Know About Universal Scene Description—From One of Its Founding Developers www.bit.ly/PLSN_UniversalSceneDescription

- The Fascinating History and Evolution of Extended Reality (XR)—Covering AR, VR and MR www.bit.ly/PLSN_HistoryXR

- Universal Studios puts real money into virtual stage https://lat.ms/442h3Xt

- The Virtual Production Glossary – There is no X in the Virtual Production Directory but you can find XR under Extended Reality https://bit.ly/VPGlossary

- Getting Started with LED on Camera, Brompton https://bit.ly/PLSN_LEDonCamera

- Future of Extended Reality https://bit.ly/PLSN_FutureXR

- Real-Time Video Content for Virtual Production, Mixed Reality and Live Entertainment by Laura Frank, Routledge 2022 https://bit.ly/LauraFrankBook2022