A Conversation about Lighting for eXtended Reality (XR) with Cory FitzGerald

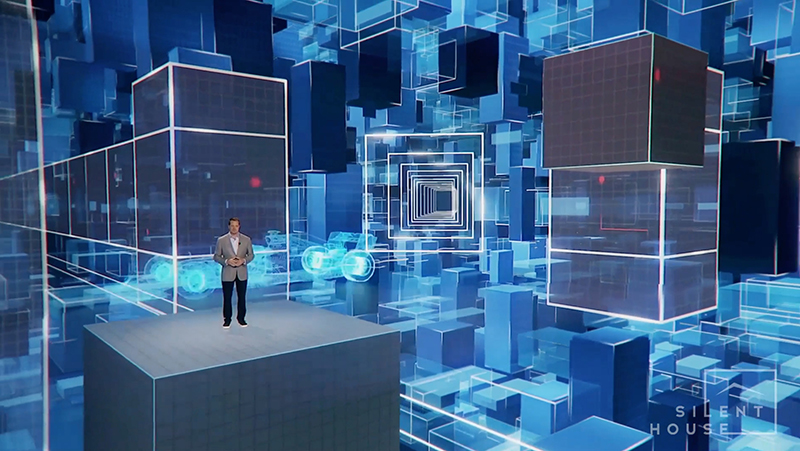

With time to explore new ways of working during the Covid-19 pandemic, top designers have been pushing forward with eXtended Reality (XR) innovations for presenting music performances, award broadcasts and corporate events. Over the coming months, PLSN will continue focusing on this cutting-edge visual technology. This month we delve into XR with lighting and production designer Cory FitzGerald, a design partner with Silent House Productions and president of XR Studios.

Skills, Time and Balance

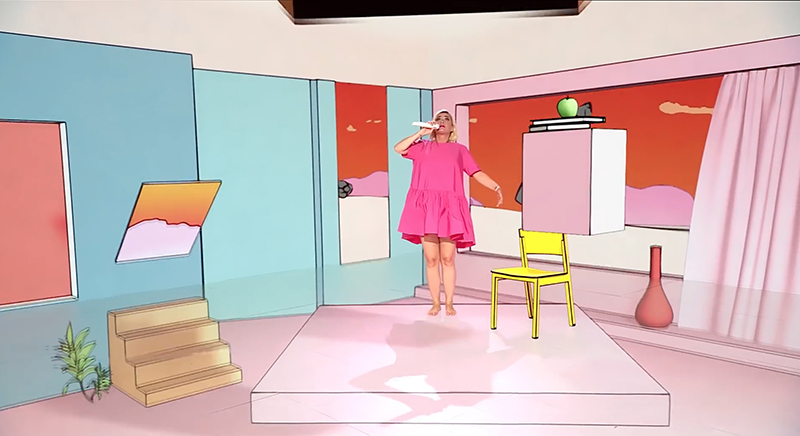

“I think XR is a really interesting medium. It’s very difficult, but in all the right creative ways,” FitzGerald comments. “It’s sort of a hybrid between theater, music videos, and filmic cinematography. You’re balancing between a camera system to match between the LED panels and the lighting—general white light on the people—and then the virtual extension matches the actual reality.”

FitzGerald notes the advantages that those with experience in film and TV lighting have for getting into XR lighting. “It’s more about what is the camera seeing,” he says. “I think the people that are very good right now who are able to jump right into XR lighting are the ones with a lot of broadcast and cinematography experience. The experience of being able to tell what the camera’s going to do, and how it’s going to react, and what shadows you want to keep, or get rid of; that kind of understanding. It’s more of a camera-based medium than a rock show or festival, for instance. XR is all about the final output. All the things in the room affect the output, so that’s really what your eyes have to be on the whole time, the output. It’s what makes a difference on the camera. If the performer moves 10 feet downstage or 10 feet upstage, and their level changes 100 foot-candles in that space, you need to account for that. You also need to understand that on a normal show, you’re shading the cameras to balance the iris to what’s going on, but here, the cameras are very specifically set to match the LED walls and the [virtual] extension. So lighting is essentially shading that level change and between those different looks. It all constantly comes down to pre-planning and rehearsing so that it looks right.”
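To put rough numbers on the level change FitzGerald describes, here is a minimal sketch using the inverse-square law. It is an illustration only, not his metering method. It assumes a single fixture that behaves like a point source, and that a 10-foot move changes the throw distance by the full 10 feet; real rigs with overhead, multi-fixture or soft sources will fall off differently. The function names and the 30-foot, 150 foot-candle starting values are hypothetical.

```python
import math


def illuminance_at(reference_fc, reference_distance_ft, new_distance_ft):
    """Estimate foot-candles at a new throw distance (inverse-square law).

    Assumes one point-like source; all names and values are illustrative.
    """
    if reference_distance_ft <= 0 or new_distance_ft <= 0:
        raise ValueError("distances must be positive")
    return reference_fc * (reference_distance_ft / new_distance_ft) ** 2


def stops_difference(new_fc, reference_fc):
    """Express a level change in photographic stops (each stop doubles the light)."""
    return math.log2(new_fc / reference_fc)


if __name__ == "__main__":
    metered_fc = 150.0   # hypothetical key-light reading at the performer's mark
    throw_ft = 30.0      # hypothetical fixture-to-mark distance

    for label, distance in (("10 ft closer", 20.0), ("10 ft farther", 40.0)):
        level = illuminance_at(metered_fc, throw_ft, distance)
        stops = stops_difference(level, metered_fc)
        print(f"{label}: {level:.1f} fc ({stops:+.2f} stops)")
```

Under these assumptions, the same 10-foot move costs far less light walking away from the fixture than it adds walking toward it. Because the cameras stay locked to the LED wall, that difference has to be corrected with lighting levels rather than with the iris.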

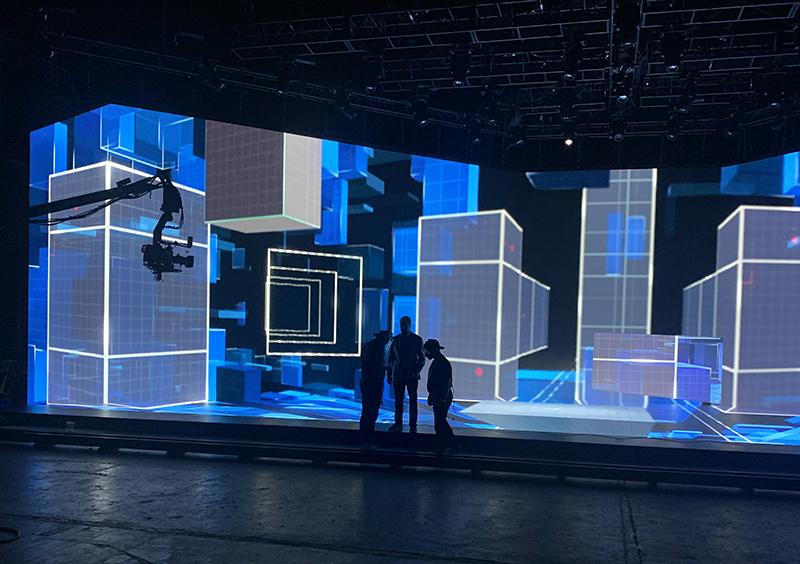

Planning and rehearsals are extremely important to lighting any successful XR project, whether live-to-broadcast or recorded. This is something that FitzGerald, like many working on XR projects, can’t stress enough. The constant refrain is to allow time to set up all the components including the lighting, rehearse every moment, and make sure it’s all working before you start to shoot. “The lighting, like every other part, takes time. That’s what we always tell people about XR,” states FitzGerald. “There’s a rehearsal process, and a lot of time is in pre-production. The more time you can allocate and dedicate to it, the smoother it will be. And that isn’t previz, because everything has to be in the XR space itself. It’s full production rehearsals on camera, camera blocking, or however you designate it. Then you can shoot it. You’re definitely going to need the time to finesse and adjust levels, with all the departments working together. Working in XR is definitely a more collaborative art form than any other thing I’ve seen so far.”

Lighting Considerations

As for general thoughts an LD should keep in mind when lighting an XR project, FitzGerald gave us a few tips to share. “Every time you add a light into the real world, you change the general ambiance. You change the glow, the reflections; everything in the space could be affected, and you have to then adjust the [virtual] extension to match that. So, if you add a pink light in the real world that’s very ambient/washy, suddenly the screen looks pink, but the extension doesn’t. You have to match all that. It’s a delicate balance between shaping and angles, and where you’re coming from; what shadows you’re leaving behind. But you have to know: What does the color do? What does it do to the texture? Are you trying to match a real-life photorealistic scene? Or are you trying to do something otherworldly and really crafting that in a much more black box theatrical way than what you’d be used to on, say, a concert stage? On a concert stage, you’re lighting an environment where there’s a video content screen behind the performers. The two are visually connected in the way the camera sees them, but you’re not making a magic trick happen, so the process of making the subject look good is much easier. Here it’s all a part of making the camera see them inside of the virtual space. In XR, you really are lighting that magic trick concept to make it work.”

It’s vital to know what you’re lighting, and that it’s the real-world lighting that’s lighting people and objects. “The division is very much real and not real,” he describes. “‘Not-real’ lights [those in the virtual content] don’t do anything in the real world, except maybe give some glow of color off the LED screen. The real lights have to actually illuminate the people, create shadows — or not create shadows — whatever you’re trying to do. That all has to come from real-world lighting. From the ‘not-real’ lighting, that color and that lighting effect will match only what’s in the virtual world, so you want to replicate that in the real world. If it’s a lighting moment, or if it’s a color combination, or some kind of gag that you’re trying to do, you want to match that in the real world to make the magic trick work.”

As any lighting designer will tell you, LED walls give off an ambient glow. In XR, that’s still true, and the lighting must account for it. To incorporate the ambient glow of light and color from the virtual lighting into the real lighting, FitzGerald has used the LED walls themselves and, in some designs, added other LED panels or real sources to provide the reflected light from the virtual world. “We’ve done it both ways,” he says. “The LED screens inherently are bright enough to put off this light, but we’ve also put bright, low-res panels with the same type of content in front of the screen, to create reflections. That’s how a lot of virtual production works, like The Mandalorian, where the screen is actually creating the sky or the reflection of something not actually in the room. As far as creating sources or soft boxes to match colors, that all comes from conventional fixtures that are built above or in front of the stage.”

When it comes time to choose the best source for the real lighting, FitzGerald states, “We have tried everything. I think it really comes down to the ambient reflections off things in the screen, so the washier the lights, the more chaotic it makes the scene, and problematic for matching the LED screens to the extension. What I’ve found works well is high CRI white light fixtures that have shutters and really controllable sources, so that you can throw light where you need it — and keep it off where you don’t.”

Working Process

As for the workflow, FitzGerald again comments that it is about the output, and one must remember that, in XR, it isn’t the audience but the camera that must always be considered. “You get the scenery onstage, you start looking at what it does, making sure it works on camera. Then, you start layering in lighting on top of that with a subject or a stand-in, or a prop, or whatever it’s going to be. You make sure that watching the monitor, you’re matching levels, you’re matching shadows, angles, choosing different spotlights, or choosing different color combinations, brightness levels. That’s all really dependent, I think, on the eye. I typically meter all the key light so that it’s matching, and then I go to the console, or the monitor, or work with the programmer to look at it. Then we make some decisions, like ‘That looks good. That’s too bright. That’s too far away.’ It really does change as the cameras all come into play, especially with multi-cam XR, which is what we’ve been doing at XR Studios. With every angle you’re going to get a different, basic brightness, or how far the cameras are away, how tight the lensing is; everything should be set to match at some point. Then, I find that you’re really keying and doing the shading with the lighting, as opposed to with the shader or with the iris of the camera. Every change affects everything else, so you have to keep adjusting it all, and look at the monitor.”